Linux MIDI: A Brief Survey, Part 4

In this installment of our tour of Linux MIDI software, we look at some experimental MIDI music-making environments. I've divided this class of software into command-line and GUI-based applications. Whatever the interface, users should expect to apply more than the average amount of brainpower to get the best results from this software. With that warning in mind, let's first look at some language-based environments designed for the experimental MIDI musician.

Craig Stuart Sapp's Improv is an interactive, performance-oriented environment for experimentally minded MIDI musicians. The system currently is available as a package that includes a library of C++ classes optimized for MIDI I/O and a set of examples demonstrating the functions and capabilities of those classes.

Improv controls real-time MIDI communication between a host computer and an external synthesizer. In a typical program, the computer receives MIDI input from the synthesizer, immediately alters that input in some preprogrammed manner and sends the altered data stream to the specified MIDI output port. Some Improv examples have the computer produce a MIDI output stream that can be altered by the external keyboard, creating interesting possibilities for a musical "dialog" with the program.

The following small program inverts incoming MIDI note numbers across the keyboard's 0-127 range, mapping each note number n to 127 - n. Thus, if a player plays the series C-D-E starting on middle C (notes 60, 62 and 64), two whole steps upward, the Improv program simultaneously plays G-F-Eb (notes 67, 65 and 63), two whole steps downward. With MIDI Thru enabled, the original and inverted lines sound together. The code is from the set of examples provided in the Improv package. For brevity's sake, I've edited Craig's comments, but the entire listing is available on-line.

// An Improv program that inverts the incoming MIDI note numbers.
//
// Header for synthesizer I/O programming
#include "synthImprov.h"

/*------------- beginning of improvization algorithms ------------*/

/*--------------- maintenance algorithms ------------------------*/

// description -- Put a description of the program and how to use it here.
void description(void) {
   cout << "plays music backwards" << endl;
}

// initialization -- Put items here which need to be initialized at the
// beginning of the program.
void initialization(void) { }

// finishup -- Put items here which need to be taken care of when the
// program is finished.
void finishup(void) { }

/*-------------------- main loop algorithms -----------------------------*/

// mainloopalgorithms -- this function is called by the improv interface
// continuously while the program is running. The global variable t_time
// which stores the current time is set just before this function is
// called and remains constant while in this function.
MidiMessage m;

void mainloopalgorithms(void) {
   while (synth.getNoteCount() > 0) {
      m = synth.extractNote();
      // keep the command byte (p0) and velocity (p2); invert the key number (p1)
      synth.send(m.p0(), 127 - m.p1(), m.p2());
   }
}

/*-------------------- triggered algorithms -----------------------------*/

// keyboardchar -- Put commands here which will be executed when a key is
// pressed on the computer keyboard.
void keyboardchar(int key) { }

/*------------------ end improvization algorithms -----------------------*/

The main loop of this example performs a simple inversion of each incoming MIDI note number and sends the result to the MIDI output port. The example is trivial, but it demonstrates Improv's real-time operation: the inverted note sounds at apparently the same moment as the note that triggered it.
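To make the arithmetic concrete outside Improv, here is a minimal C++ sketch that applies the same 127 - n inversion to a raw three-byte MIDI message. It assumes plain note-on (status 0x90-0x9F) and note-off (0x80-0x8F) messages without running status, and the names invertNote and MidiBytes are hypothetical, not part of Improv's API. Inverting note-offs as well as note-ons matters; otherwise the inverted note would never be released.

```cpp
#include <array>
#include <cstdint>

// A raw channel message: status byte, key number, velocity.
using MidiBytes = std::array<std::uint8_t, 3>;

// Mirror the key number of a note-on or note-off around the middle of
// the 0-127 range (n -> 127 - n); other message types pass through.
MidiBytes invertNote(MidiBytes msg) {
    const std::uint8_t type = msg[0] & 0xF0;   // strip the channel nibble
    if (type == 0x80 || type == 0x90)
        msg[1] = static_cast<std::uint8_t>(127 - msg[1]);
    return msg;
}
```

Fed C-D-E from middle C (60, 62, 64), the function returns key numbers 67, 65 and 63, the G-F-Eb line described above.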

The henontune example is one of my favorite demonstrations of Improv's capabilities. When henontune is run, it produces a melody generated by the Hénon map, a chaotic mapping function. Incoming MIDI note numbers and velocities control the parameters of the mapping function, resulting in a new note stream that becomes cyclic, enters a chaotic sequence or simply stops. The code listing for henontune is too lengthy to print here, but it can be read on-line.
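The Hénon map itself is two lines of arithmetic: x' = 1 - a·x² + y and y' = b·x. The C++ sketch below iterates it and scales each x value into a MIDI note number. It is not henontune's code: the note mapping (x in [-1.5, 1.5] onto notes 36-96), the starting point (0, 0) and the rule that the melody stops once the orbit leaves that range are all assumptions made for illustration. In henontune the parameters a and b are driven by incoming notes and velocities; here they are simply function arguments, and henonMelody is a hypothetical name.

```cpp
#include <cmath>
#include <vector>

// Iterate the Henon map  x' = 1 - a*x*x + y,  y' = b*x  from (0, 0)
// and turn each new x value into a MIDI note number.
std::vector<int> henonMelody(double a, double b, int count) {
    std::vector<int> notes;
    double x = 0.0, y = 0.0;
    for (int i = 0; i < count; ++i) {
        const double nx = 1.0 - a * x * x + y;
        const double ny = b * x;                   // uses the previous x
        x = nx;
        y = ny;
        if (!(std::fabs(x) <= 1.5)) break;         // orbit escaped (or NaN): melody stops
        // map x in [-1.5, 1.5] linearly onto notes 36..96
        notes.push_back(static_cast<int>(std::lround(66.0 + 20.0 * x)));
    }
    return notes;
}
```

With the classic values a = 1.4 and b = 0.3 the orbit settles onto the Hénon attractor and the melody wanders irregularly; with a = 3.0 the orbit escapes after the first note, a crude analogue of henontune's note stream that "simply stops."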

Improv can be used to create your own MIDI programming environment, including non-real-time applications. The program's author has provided control processes not only for typical MIDI synthesizers but also for the Radio Baton and the Adams Stick. The package's documentation is excellent and tells you all you need to know in order to create your own Improv programs.

One of these days, I will dedicate a column to the numerous interesting music applications made with the Java programming language, one of which is the excellent jMusic audio/MIDI programming environment. Like Improv, the jMusic package provides a library of classes as well as a set of useful examples to demonstrate the library functions and capabilities. jMusic's API also puts Java's graphics capabilities at the programmer's disposal, making it possible to build attractive GUIs for music software.

An impressive amount of work has gone into jMusic development. The resulting environment is a powerful cross-platform resource for music composition, analysis and non-real-time performance. jMusic can be used to create real-time MIDI note streams or it can produce standard MIDI files. You can employ JavaSound for conversion to audio output, or you can use the MidiShare environment to provide jMusic with flexible MIDI I/O for routing output to external synthesizers, including softsynths.

The following example from the jMusic on-line tutorial demonstrates a basic program that creates a standard MIDI file as its output. The example is trivial, containing exactly one note, but it does indicate how a typical jMusic program is organized.

// First, access the jMusic classes:
import jm.JMC;
import jm.music.data.*;
import jm.util.*;

/*
 * This is the simplest jMusic program of all.
 * The equivalent to a programming language's 'Hello World'
 */
public final class Bing implements JMC{
    public static void main(String[] args){
        //create a middle C minim (half note)
        Note n = new Note(C4, MINIM);
        //create a phrase
        Phrase phr = new Phrase();
        //put the note inside the phrase
        phr.addNote(n);
        //pack the phrase into a part
        Part p = new Part();
        p.addPhrase(phr);
        //pack the part into a score titled 'Bing'
        Score s = new Score("Bing");
        s.addPart(p);
        //write the score as a MIDI file to disk
        Write.midi(s, "Bing.mid");
    }
}

Compiling this code with javac Bing.java (with the jMusic classes on your CLASSPATH) results in a file named Bing.class. Running java Bing produces a Type 1 standard MIDI file that then can be played by any MIDI player or sequencer, including TiMidity, playmidi, MusE and Rosegarden4. jMusic applications typically first create a MIDI stream that either is output as a standard MIDI file or is handed off to JavaSound for translation to audio. The jMusic tutorial includes the following version of this program, which also prepares its output for an audio file in Sun's AU format.
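For a sense of what a standard MIDI file contains, the C++ sketch below assembles a one-note file byte by byte: an MThd header chunk, then an MTrk chunk holding a note-on, a note-off and the end-of-track meta event, with delta times stored as variable-length quantities. It is not jMusic's writer. It uses the simpler Type 0 layout rather than Type 1, assumes 480 ticks per quarter note (so a minim lasts 960 ticks) and fixes the note-off velocity at 64; all function names are hypothetical.

```cpp
#include <cstdint>
#include <vector>

using Bytes = std::vector<std::uint8_t>;

// Big-endian integers, as used in SMF chunk headers.
void put16(Bytes& out, std::uint16_t v) {
    out.push_back(static_cast<std::uint8_t>(v >> 8));
    out.push_back(static_cast<std::uint8_t>(v & 0xFF));
}
void put32(Bytes& out, std::uint32_t v) {
    for (int shift = 24; shift >= 0; shift -= 8)
        out.push_back(static_cast<std::uint8_t>((v >> shift) & 0xFF));
}

// Variable-length quantity: 7 bits per byte, most significant group first,
// with the top bit set on every byte except the last.
void putVarLen(Bytes& out, std::uint32_t v) {
    std::uint8_t groups[5];
    int n = 0;
    groups[n++] = v & 0x7F;
    while ((v >>= 7) != 0) groups[n++] = 0x80 | (v & 0x7F);
    while (n > 0) out.push_back(groups[--n]);
}

// A Type 0 file with one track: note-on, note-off after durTicks, end of track.
Bytes oneNoteSmf(std::uint8_t key, std::uint8_t velocity, std::uint32_t durTicks,
                 std::uint16_t ticksPerQuarter = 480) {
    Bytes track;
    putVarLen(track, 0);
    track.insert(track.end(), {0x90, key, velocity});   // note-on, channel 1
    putVarLen(track, durTicks);
    track.insert(track.end(), {0x80, key, 64});         // note-off, channel 1
    putVarLen(track, 0);
    track.insert(track.end(), {0xFF, 0x2F, 0x00});      // end-of-track meta event

    Bytes smf = {'M', 'T', 'h', 'd'};
    put32(smf, 6);                 // header length
    put16(smf, 0);                 // format 0
    put16(smf, 1);                 // one track
    put16(smf, ticksPerQuarter);   // time division
    smf.insert(smf.end(), {'M', 'T', 'r', 'k'});
    put32(smf, static_cast<std::uint32_t>(track.size()));
    smf.insert(smf.end(), track.begin(), track.end());
    return smf;
}
```

Calling oneNoteSmf(60, 100, 960) yields a 35-byte middle C minim; note how the 960-tick delta time is stored as the two bytes 0x87 0x40.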

import jm.JMC;
import jm.music.data.*;
import jm.util.*;
import jm.audio.*;

public final class SonOfBing implements JMC{
    public static void main(String[] args){
        Score score = new Score(new Part(new Phrase(new Note(C4, MINIM))));
        Write.midi(score);
        Instrument inst = new SawtoothInst(44100);
        Write.au(score, inst);
    }
}

Compiling this code with javac SonOfBing.java produces the SonOfBing.class file. Running java SonOfBing opens dialogs for saving first the MIDI output and then the AU file.
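Write.au hands the score to an instrument for rendering, and SawtoothInst(44100) asks for a sawtooth voice at a 44,100Hz sample rate. The C++ sketch below is a hypothetical stand-in, not jMusic's SawtoothInst: a naive (non-band-limited) sawtooth for one note, assuming a tempo of 60 beats per minute so that a minim (two beats) lasts two seconds, or 88,200 samples. The function name renderSawtooth is an illustration only.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Render one note as a naive sawtooth ramp from -1 up to +1 per cycle.
std::vector<float> renderSawtooth(double freqHz, double beats, double bpm, int sampleRate) {
    const double seconds = beats * 60.0 / bpm;
    const std::size_t n = static_cast<std::size_t>(std::lround(seconds * sampleRate));
    std::vector<float> out(n);
    double phase = 0.0;                          // position within the cycle, 0 <= phase < 1
    const double inc = freqHz / sampleRate;      // phase advance per sample
    for (std::size_t i = 0; i < n; ++i) {
        out[i] = static_cast<float>(2.0 * phase - 1.0);
        phase += inc;
        if (phase >= 1.0) phase -= 1.0;          // wrap at the end of each cycle
    }
    return out;
}
```

renderSawtooth(261.63, 2.0, 60.0, 44100) gives a two-second middle C. A production instrument would use a band-limited oscillator, since this naive ramp aliases audibly at high pitches.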

The next example is a more elaborate program written by jMusic developer Andrew Brown. The program is called NIAM--N Is After M--named in homage to the famous M music software written by David Zicarelli. Again, the code listing is too lengthy for inclusion here, but the source may be obtained from the Applications page of the jMusic Web site (see Resources). The screenshot in Figure 1 nicely shows off jMusic's GUI capabilities.

Up to four parts may be defined with separate settings for all parameters seen in Figure 1. Output can be saved as a MIDI file, and despite its non-real-time nature and its simple appearance, NIAM is a powerful little program. Many other jMusic-based applications can be found on the jMusic Web site, showing off capabilities such as melody generation by way of cellular automata, music theory assistance and converting standard music notation to a MIDI file.

jMusic's developers have gone to some pains to provide copious documentation and tutorial material for the new user. The jMusic Web site also provides pointers to general information about Java and its music and sound features, and it offers guidance to other relevant materials on computer music and related topics. If you already know Java, you should be able to jump right into using jMusic, and with the excellent help available from its Web site even Java novices quickly will be writing their own jMusic tools and applications.

Dr. Albert Graef describes his Q programming language as "a functional programming language based on term rewriting". According to the documentation, a Q program is "a collection of equations...used as rewriting rules to simplify expressions". If this description is a bit opaque for you, have no fear: the good doctor has supplied excellent documentation and many practical examples through which you quickly can learn and appreciate the power of the Q language.
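The idea of equations used as rewriting rules can be shown without Q itself. The C++ sketch below is a toy term rewriter, not Q's interpreter: terms are trees of symbols, and two hypothetical equations defining addition on Peano numerals, add(0,Y) = Y and add(s(X),Y) = s(add(X,Y)), are applied repeatedly, innermost subterms first, until no equation matches. What remains is the simplified expression, its normal form.

```cpp
#include <cstddef>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// A term is a symbol applied to zero or more argument terms, e.g. add(s(0),0).
struct Term {
    std::string sym;
    std::vector<std::shared_ptr<Term>> args;
};
using TermP = std::shared_ptr<Term>;

TermP mk(const std::string& sym, std::vector<TermP> args = {}) {
    return std::make_shared<Term>(Term{sym, std::move(args)});
}

// Try the two equations at the root of t; return nullptr if neither applies.
//   add(0, Y)    = Y
//   add(s(X), Y) = s(add(X, Y))
TermP rewriteRoot(const TermP& t) {
    if (t->sym != "add" || t->args.size() != 2) return nullptr;
    const TermP& x = t->args[0];
    const TermP& y = t->args[1];
    if (x->sym == "0") return y;
    if (x->sym == "s" && x->args.size() == 1)
        return mk("s", {mk("add", {x->args[0], y})});
    return nullptr;
}

// Simplify the arguments first, then rewrite at the root until no
// equation matches; the result is the term's normal form.
TermP normalize(const TermP& t) {
    std::vector<TermP> args;
    for (const TermP& a : t->args) args.push_back(normalize(a));
    TermP cur = mk(t->sym, std::move(args));
    while (TermP next = rewriteRoot(cur)) cur = normalize(next);
    return cur;
}

// Print a term in function-call notation.
std::string show(const TermP& t) {
    if (t->args.empty()) return t->sym;
    std::string s = t->sym + "(";
    for (std::size_t i = 0; i < t->args.size(); ++i) {
        if (i > 0) s += ",";
        s += show(t->args[i]);
    }
    return s + ")";
}
```

Normalizing add(s(s(0)),s(s(0))), that is 2 + 2, yields s(s(s(s(0)))), or 4. In Q you would write only the two equations; the interpreter supplies the matching and rewriting machinery spelled out here.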

The Q package itself includes some basic MIDI routines, but the Q-Midi module greatly expands those capabilities. Q-Midi represents MIDI events as symbolic data, which should ease the writing of programs that manipulate and process MIDI messages and sequences. Q-Midi supports real-time MIDI I/O and the loading, editing and playing of standard MIDI files, because it is built on the GRAME team's MidiShare, an excellent C library for portable, cross-platform MIDI programming.

The following code is taken from Dr. Graef's PDF introduction to Q-Midi. It is an example of Q-Midi's use in algorithmic composition based on a method of composing with dice devised by the 18th-century composer Johann Kirnberger. Such methods were not new with Kirnberger, but his technique lends itself nicely to expression through Q-Midi.

/* import Q-Midi functions */
include midi;

/* read in MIDI files from a directory named "midi" */
def M = map load (glob "midi/*.mid");

/* create a map for file selection via the dice */
/* the numbers are the MIDI file fragments, */
/* i.e., 70.mid, 10.mid, 42.mid, etc. */
def T = map (map pred)
        [// part A
         [ 70, 10, 42, 62, 44, 72],
         [ 34, 24, 6, 8, 56, 30],
         [ 68, 50, 60, 36, 40, 4],
         [ 18, 46, 2, 12, 79, 28],
         [ 32, 14, 52, 16, 48, 22],
         [ 58, 26, 66, 38, 54, 64],
         // part B
         [ 80, 20, 82, 43, 78, 69],
         [ 11, 77, 3, 41, 84, 63],
         [ 59, 65, 9, 45, 29, 7],
         [ 35, 5, 83, 17, 76, 47],
         [ 74, 27, 67, 37, 61, 19],
         [ 13, 71, 1, 49, 57, 31],
         [ 21, 15, 53, 73, 51, 81],
         [ 33, 39, 25, 23, 75, 55]];

/* define and roll the dice */
dice N = listof die (I in nums 1 N);
die = random div 1000 mod 6;

/* make selections from map table */
polonaise D = foldl seq [] (A++A++B++A1)
  where P = map (M!) (zipwith (!) T D),
        A = take 6 P, B = drop 6 P, A1 = drop 2 A;

/* create sequences */
seq S1 S2 = S2 if null S1;
          = S1++map (shift DT) S2 where (DT,_) = last S1;
shift DT (T,MSG) = (T+DT,MSG);

/* play the results of 14 throws */
kirnberger = play (polonaise (dice 14));

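Kirnberger's method used the roll of the dice to determine which of 84 melodic fragments--the files in the midi directory--would be combined to create the phrases for a dance form called a polonaise. Each of the 14 rows of the table offers six candidate fragments, one per face of the die, and one throw is made per row. The first six rows supply part A and the remaining eight supply part B; the piece is then assembled as A, A again, B and finally A without its first two fragments. The dice decide which fragments are combined, and the final line of the code plays the results.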
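For readers who don't know Q, the C++ sketch below restates the same algorithm. It is a rough port under simplifying assumptions: an event is a (time, message) pair with the message reduced to a stand-in int, each fragment is assumed to end with an event that marks the end of its measure (because seqAppend, like the Q seq function, starts each new fragment at the time of the previous fragment's last event), and the dice come from std::mt19937 rather than Q's random. All names are hypothetical.

```cpp
#include <cstddef>
#include <random>
#include <utility>
#include <vector>

// An event is (time, message); the message is a stand-in int here.
using Event = std::pair<double, int>;
using Sequence = std::vector<Event>;

// Kirnberger's table: 14 rows (6 for part A, 8 for part B), one column per die face.
const std::vector<std::vector<int>> kTable = {
    {70, 10, 42, 62, 44, 72}, {34, 24,  6,  8, 56, 30}, {68, 50, 60, 36, 40,  4},
    {18, 46,  2, 12, 79, 28}, {32, 14, 52, 16, 48, 22}, {58, 26, 66, 38, 54, 64},
    {80, 20, 82, 43, 78, 69}, {11, 77,  3, 41, 84, 63}, {59, 65,  9, 45, 29,  7},
    {35,  5, 83, 17, 76, 47}, {74, 27, 67, 37, 61, 19}, {13, 71,  1, 49, 57, 31},
    {21, 15, 53, 73, 51, 81}, {33, 39, 25, 23, 75, 55}};

// Like Q's seq: append s2 to s1, shifting s2 by the time of s1's last event.
Sequence seqAppend(Sequence s1, const Sequence& s2) {
    if (s1.empty()) return s2;
    const double dt = s1.back().first;
    for (const Event& e : s2) s1.emplace_back(e.first + dt, e.second);
    return s1;
}

// One die value (0-5) per table row.
std::vector<int> rollDice(int n, std::mt19937& rng) {
    std::uniform_int_distribution<int> face(0, 5);
    std::vector<int> d;
    for (int i = 0; i < n; ++i) d.push_back(face(rng));
    return d;
}

// fragments[k] holds fragment number k+1 (the Q code's "pred").
Sequence polonaise(const std::vector<Sequence>& fragments, const std::vector<int>& dice) {
    std::vector<Sequence> p;
    for (std::size_t row = 0; row < kTable.size(); ++row)
        p.push_back(fragments.at(kTable[row].at(dice.at(row)) - 1));
    std::vector<Sequence> order(p.begin(), p.begin() + 6);      // A
    order.insert(order.end(), p.begin(), p.begin() + 6);       // A again
    order.insert(order.end(), p.begin() + 6, p.end());         // B
    order.insert(order.end(), p.begin() + 2, p.begin() + 6);   // A1: A minus its first two fragments
    Sequence result;
    for (const Sequence& s : order) result = seqAppend(std::move(result), s);
    return result;
}
```

A call such as polonaise(fragments, rollDice(14, rng)) mirrors the Q expression polonaise (dice 14): 24 fragments in all, 6 + 6 + 8 + 4.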

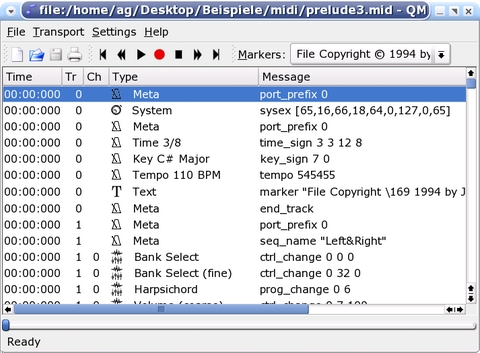

At this time there is no GUI for the Kirnberger code, but Figure 2 illustrates the possibilities of Q combined with KDE/Qt. The basic MIDI file player code is included as an example with the Q sources, but QMidiPlayer is a separate source package that requires a full installation of Q, the Q-Midi module and a recent version of KDE/Qt.

Its home page tells us that Common Music (CM) is:

an object-oriented music composition environment. It produces sound by transforming a high-level representation of musical structure into a variety of control protocols for sound synthesis and display. Common Music defines an extensive library of compositional tools and an API through which the composer can easily modify and extend the system.

Common Music's output types include scores formatted for the Csound and Common Lisp Music sound synthesis languages, for the Common Music Notation system and for MIDI as a file. It also can be rendered in real-time by way of MidiShare.

The following code creates a texture of self-similar motifs in an aural simulation of the well-known Sierpinski triangle:

;;; 8. Defining a recursive process (sierpinski) with five input args.
;;; sprout's second arg is the time to start the sprouted object.
;;;
(define (sierpinski knum ints dur amp depth)
  (let ((len (length ints)))
    (process for i in ints
             for k = (transpose knum i)
             output (new midi :time (now) :duration dur
                         :amplitude amp :keynum k)
             when (> depth 1)
             ;; sprout a process on output note
             sprout
             (sierpinski (transpose k 12) ints
                         (/ dur len) amp (- depth 1))
             wait dur)))

(events (sierpinski 'a0 '(0 7 5) 3 .5 4) "test.mid")
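The recursion in this process is easier to see with the MIDI output stripped away. The C++ sketch below is an illustrative port, not Common Music code: it records each note as a (time, key, duration) triple, takes A0 to be MIDI key 21 and leaves out amplitude. Each note at depth d sprouts a copy of the interval pattern an octave higher whose notes are len times shorter, so the copy exactly fills the parent note's duration. The names are hypothetical.

```cpp
#include <cstddef>
#include <vector>

struct NoteEvent {
    double time;  // start time in seconds
    int key;      // MIDI key number
    double dur;   // duration in seconds
};

// Play each interval of `ints` above `key`; while depth > 1, every note
// sprouts the whole pattern again, an octave higher and len times faster,
// starting at that note's own start time.
void sierpinski(double start, int key, const std::vector<int>& ints,
                double dur, int depth, std::vector<NoteEvent>& out) {
    const double len = static_cast<double>(ints.size());
    double now = start;
    for (int i : ints) {
        const int k = key + i;                    // (transpose knum i)
        out.push_back({now, k, dur});             // output (new midi ...)
        if (depth > 1)                            // when (> depth 1) sprout ...
            sierpinski(now, k + 12, ints, dur / len, depth - 1, out);
        now += dur;                               // wait dur
    }
}
```

Calling sierpinski(0.0, 21, {0, 7, 5}, 3.0, 4, events) mirrors the events call above and produces 3 + 9 + 27 + 81 = 120 notes within nine seconds.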

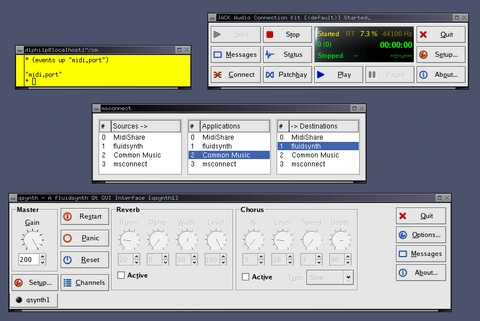

This fragment is located in the etc/examples/intro.cm file, a compendium of brief CM programs that introduce many useful basic features of the language. By using the MidiShare system, you can render the fragment in real time simply by changing test.mid to midi.port. Of course, you need a MidiShare-aware synthesizer to connect with, such as the Fluidsynth soundfont player launched with the following options:

fluidsynth --midi-driver=midishare --audio-driver=jack 8mbgmsfx.sf2

You also need to hook CM to the synthesizer. Figure 3 shows off CM connected to QSynth (a GUI for Fluidsynth) by way of the MidiShare msconnect utility. In this arrangement Fluidsynth is seen running with the JACK audio driver, hence the appearance of QJackCtl.

As a language-based environment, Common Music development is most at home in a Lisp-aware text editor, such as Emacs or XEmacs. Users of Common Music for the Macintosh have long enjoyed the use of a graphic tool called the Plotter, which recently was ported to Linux. A new rendering GUI (Figure 4) has been added to the Common Music CVS sources that should make it easier to direct CM output to its various targets, including the Plotter and, of course, MIDI.

Common Music has been in development for many years, and its author, Rick Taube, has provided excellent documentation and tutorials for new and experienced users. Recently, Rick published an outstanding book about music composition with the computer, Notes From The Metalevel (see Resources below). His demonstration language of choice naturally is Common Music. The system is included on the book's accompanying CD, so new users can jump immediately into developing and testing their own CM code.

The venerable Csound music and sound programming language has long supported MIDI input in two forms: standard MIDI files whose contents are played by Csound instruments, and live input from external hardware--a keyboard, sequencer or wind controller--that plays a Csound instrument directly. Thanks primarily to the work of developer Gabriel Maldonado, Csound also is a useful programming language for real-time MIDI output.

Playing a Csound instrument through an external MIDI device is a fairly simple procedure. Instruments are defined in an orchestra file, an orc in Csound-speak; the following orc prepares a MIDI-sensitive instrument:

instr 1
inum    notnum                  ; receive MIDI note number
iamp    ampmidi inum*50         ; use it to set amplitude scaling
kfreq   cpsmidib                ; convert MIDI note number to Hertz frequency value
a1      oscil iamp,kfreq,1      ; three detuned oscillators, each using a
                                ; different waveform (see score below)
a2      oscil iamp,kfreq*1.003,2
a3      oscil iamp,kfreq*.997,3
asig    = a1+a2+a3              ; audio signal equals sum of three oscillators
kenv    linenr 1,.07,.11,.01    ; MIDI-controlled envelope for scaling output
        out asig*kenv           ; output equals oscillators shaped by envelope
endin

Notice that once MIDI data is captured by Csound, it can be utilized for any purpose within an instrument design.
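cpsmidib turns the incoming note number (adjusted by the pitch-bend wheel) into a frequency in Hertz. Ignoring pitch bend, the standard equal-tempered conversion is f = 440 · 2^((n - 69)/12), with note 69 as A440. The small C++ sketch below computes it, along with the size in cents of the detuning ratios used for a2 and a3. It assumes the default A440 tuning, and the function names are hypothetical.

```cpp
#include <cmath>

// Equal-tempered conversion: note 69 (A4) = 440 Hz, 12 notes per octave.
double midiToHz(double note) {
    return 440.0 * std::pow(2.0, (note - 69.0) / 12.0);
}

// Size of a frequency ratio in cents (1200 cents per octave).
double ratioToCents(double ratio) {
    return 1200.0 * std::log2(ratio);
}
```

midiToHz(60) gives about 261.63Hz for middle C, while ratioToCents(1.003) and ratioToCents(0.997) come to roughly +5.2 and -5.2 cents, a slight detuning that thickens the combined sound of the three oscillators.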

The following Csound score file (a sco in Csound-speak) supplies the function tables for this instrument's oscillators:

f1 0 8192 10 .1 0 .2 0 0 .4 0 0 0 0 .8   ; function tables for oscillator waveforms
f2 0 8192 10 1 0 .9 0 0 .7 0 0 0 .4
f3 0 8192 10 .5 0 .6 0 0 .3 0 0 0 .9
f0 10000                                 ; placeholder to activate instrument for 10000 seconds
e                                        ; end score
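Each f statement above calls GEN routine 10, which fills a table with one cycle of a sum of harmonically related sine waves; the numbers after the 10 are the relative strengths of harmonics 1, 2, 3 and so on. In f1, for example, only harmonics 1, 3, 6 and 11 are present. The C++ sketch below computes such a table and rescales it to a peak of 1, as Csound does by default for positive GEN numbers. It ignores details such as Csound's extra guard point, and the function name gen10 is hypothetical.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

// GEN10-style table: amps[k] is the relative strength of harmonic k+1.
std::vector<double> gen10(std::size_t size, const std::vector<double>& amps) {
    const double twoPi = 2.0 * std::acos(-1.0);
    std::vector<double> table(size, 0.0);
    for (std::size_t i = 0; i < size; ++i)
        for (std::size_t k = 0; k < amps.size(); ++k)
            table[i] += amps[k] * std::sin(twoPi * (k + 1) * i / size);
    // rescale so the largest absolute value is 1
    double peak = 0.0;
    for (double v : table) peak = std::max(peak, std::fabs(v));
    if (peak > 0.0)
        for (double& v : table) v /= peak;
    return table;
}
```

gen10(8192, {.1, 0, .2, 0, 0, .4, 0, 0, 0, 0, .8}) corresponds to f1; the dominant 11th harmonic gives that waveform a bright, buzzy character next to the smoother f2 and f3.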

In classic Csound you would run this orc/sco like so:

csound -o devaudio -M /dev/midi -dm6

where -o determines the output target, -M selects a MIDI input device, -d suppresses graphic displays and -m6 sets the message level; the names of the orc and sco files follow these options on the command line. Instrument, score and launch options all can be rolled into a single CSD file, a format that unifies Csound's necessary components into one handy form.

Designing a Csound instrument involves defining and connecting components called opcodes. A Csound opcode could be an oscillator (oscil), an envelope generator (linenr, linseg), an assignment operator (=), a MIDI capture function (ampmidi, cpsmidib) or any of the hundreds of other functions and capabilities available as Csound opcodes.

The following CSD-formatted Csound code demonstrates the moscil opcode, a MIDI output function. The instrument, instr 1, transmits a major scale as a series of MIDI note messages starting from a given note-number, 48, and rising according to the envelope curve described by the linseg opcode.

<CsoundSynthesizer>
<CsOptions>
;;; The -Q flag selects a MIDI output port.
;;; An audio output device is required.
-Q0 -o devaudio -dm6
</CsOptions>
<CsInstruments>
sr=44100      ; audio sampling rate, behaves as a
              ; tempo control for MIDI output
kr=44100      ; signal control rate (equals sr
              ; for MIDI output best results)
ksmps=1       ; samples per control period
nchnls=1      ; number of audio channels

instr 1
ival    =       48
kchn    =       0
knum    linseg  ival,1,ival+2,1,ival+4,1,ival+5,1,ival+7,1,ival+9,1,ival+11,1,ival+12
kdur    =       .8
kpause  =       .2
        moscil  kchn,knum,44,kdur,kpause   ; There is no audio output stage.
endin
</CsInstruments>
<CsScore>
i1 0 8
e
</CsScore>
</CsoundSynthesizer>

The linseg envelope generator creates a multisegment envelope whose breakpoints are the degrees of the scale, spaced one second apart. When this file is run with csound moscil-test.csd, a C major scale is played by whatever instrument is receiving data on the MIDI interface selected by the -Q option.
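Between breakpoints, linseg interpolates in straight lines, so knum actually glides through fractional values between scale degrees. What makes the result a scale is the timing: assuming moscil reads knum each time it starts a new note, a note cycle of kdur + kpause = 1 second means every note begins exactly on a breakpoint. The C++ sketch below evaluates a linseg-style envelope to show this; it is an illustration rather than Csound's implementation, it simply holds the final value once the segments run out, and linsegAt is a hypothetical name.

```cpp
#include <cstddef>
#include <vector>

// Value at time t of an envelope with breakpoints values[0..n]
// joined by straight segments lasting durs[0..n-1] seconds.
double linsegAt(const std::vector<double>& values, const std::vector<double>& durs, double t) {
    double start = 0.0;
    for (std::size_t s = 0; s < durs.size(); ++s) {
        const double end = start + durs[s];
        if (t < end) {
            const double frac = (t - start) / durs[s];   // 0 at segment start
            return values[s] + frac * (values[s + 1] - values[s]);
        }
        start = end;
    }
    return values.back();   // past the last segment: hold the final value
}
```

With the breakpoints 48, 50, 52, 53, 55, 57, 59 and 60 and one-second segments, sampling at t = 0, 1, ..., 7 returns exactly those values, a C major scale; at t = 0.5 it returns 49, a pitch the scale never plays.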

Csound has many opcodes dedicated to the reception, transmission and alteration of MIDI messages. The possibilities are intriguing: simultaneous MIDI input/output, given two physical ports; MIDI control of synthesis parameters; instruments with combined audio/MIDI output; and even MIDI control by way of Csound's FLTK-based GUI opcodes. With Csound, your imagination is the limit.

In my next column, I'll finish this MIDI tour with a look at some GUI-based experimental MIDI applications, including Tim Thompson's KeyKit, Jeffrey Putnam's Grammidity and Elody from GRAME, the MidiShare people. See you next month!

Dave Phillips is a musician, teacher and writer living in Findlay, Ohio. He has been an active member of the Linux audio community since his first contact with Linux in 1995. He is the author of The Book of Linux Music & Sound, as well as numerous articles in Linux Journal.